Laypeople Annotation of Terminology

Source: This example was kindly contributed by Anna Hätty, Robert Bosch GmbH and Institute for Natural Language Processing (IMS), University of Stuttgart, Germany.

The aim of this study is to investigate how strongly people inherently agree on terminological expressions. Given that even experts vary significantly in their understanding of termhood, we let laypeople annotate single-word and multi-word terms, across four domains and across four task definitions.

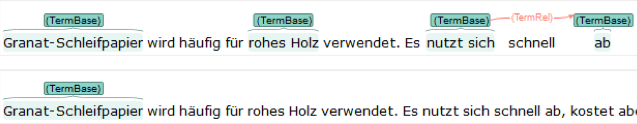

We allowed two kinds of annotations. “TermBase” marks a contiguous span of words. “TermRel” connects “TermBase” annotations when the parts of a multi-word expression (MWE) are separated, e.g. the particle of a particle verb.
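As an illustration of how the two annotation kinds fit together, the following is a minimal sketch, not the data model of the annotation tool itself. The class fields, the `resolve_terms` helper, and the example sentence are all hypothetical; the sketch assumes that spans are token offsets and that every span joined by a relation belongs to the same term.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TermBase:
    """A contiguous span of tokens (hypothetical representation)."""
    start: int  # index of the first token, inclusive
    end: int    # index after the last token, exclusive


@dataclass(frozen=True)
class TermRel:
    """A link between two TermBase spans that form one term."""
    source: TermBase
    target: TermBase


def resolve_terms(tokens, bases, rels):
    """Merge spans connected by relations and return each term's tokens.

    Assumes every span referenced by a relation is also listed in `bases`.
    """
    # Simple union-find: each span starts out as its own group.
    parent = {b: b for b in bases}

    def find(b):
        while parent[b] != b:
            b = parent[b]
        return b

    for r in rels:
        parent[find(r.source)] = find(r.target)

    # Collect the spans of each group.
    groups = {}
    for b in bases:
        groups.setdefault(find(b), []).append(b)

    # Order spans inside a term, and terms in the sentence, by position.
    terms = []
    for spans in groups.values():
        spans.sort(key=lambda s: s.start)
        words = [t for s in spans for t in tokens[s.start:s.end]]
        terms.append((spans[0].start, words))
    return [words for _, words in sorted(terms)]


# Invented example: the particle verb "abschneiden" split across the sentence.
tokens = ["Er", "schneidet", "das", "Brett", "ab"]
verb = TermBase(1, 2)      # "schneidet"
noun = TermBase(3, 4)      # "Brett"
particle = TermBase(4, 5)  # "ab"
terms = resolve_terms(tokens, [verb, noun, particle], [TermRel(verb, particle)])
print(terms)  # [['schneidet', 'ab'], ['Brett']]
```

Keeping the relation separate from the span means a discontinuous term needs no special span type: it is just several ordinary spans that share a group.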

The data for term identification comprise German open-source texts from the websites wikiHow, Wikibooks and Wikipedia. In total, the text basis consists of 20 texts (five per domain) with five sentences each. Together, the texts contain 3,075 words, distributed over the following four domains:

- diy: “do it yourself” (708 words)

- cooking (624 words)

- hunting (900 words)

- chess (843 words)

Twenty annotators were each asked to perform only one of the four identification tasks, which resulted in five annotations per task. In addition, we asked two annotators to perform all four tasks, to check whether the inter-annotator agreement differs between the two setups.
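The text does not say which agreement measure was used. As one possible way to quantify agreement among the five annotators of a task, the sketch below computes Fleiss' kappa, a common choice for more than two annotators. The token-level framing (each token labelled as term or non-term) and the toy counts are assumptions made for illustration only, not data from the study.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for a table of category counts.

    counts[i][j] is the number of annotators who assigned item i to
    category j. Every row must sum to the same number of annotators.
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    n_cats = len(counts[0])

    # Observed agreement: share of agreeing annotator pairs per item.
    per_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_observed = sum(per_item) / n_items

    # Expected agreement from the overall category proportions.
    proportions = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_cats)
    ]
    p_expected = sum(p * p for p in proportions)

    return (p_observed - p_expected) / (1 - p_expected)


# Toy data: four tokens, five annotators, columns = [term, non-term].
toy_counts = [
    [5, 0],  # all five annotators marked the token as a term
    [3, 2],
    [0, 5],
    [4, 1],
]
kappa = fleiss_kappa(toy_counts)
print(round(kappa, 3))  # 0.479
```

For the two annotators who performed all four tasks, a two-rater measure such as Cohen's kappa would be the analogous option.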